Wikidata and Wikibase as complementary research services for cultural heritage data

This is the second instalment of a 2-part blog post regarding the use of Wikidata and Wikibase as research infrastructure. You can read the first part here.

Having previously introduced differences between Wikidata and individual Wikibases it is worth delving into the relative strengths and weaknesses of each system, and presenting a hybrid model for how they may be used cooperatively as an integrated ecosystem.

The primary strength of Wikidata is currently its size, both in relation to coverage of subject area and also the sheer number of data points. The fact that it exists at all feels somewhat miraculous, leading to the Wikimedia observation that it “only works in practice. It could never work in theory”.

This growth has been enabled by providing a relatively low barrier of entry for involvement, with no impediment to anyone with internet access having the ability to edit production data [1]. This lack of limitation is not only applied to human users, as there are many resources for setting up discrete editing “bots” which amend data based on preconfigured logic, and which can be authenticated to operate autonomously based on a nominal history of user involvement.

An inherent issue with the wide reach of Wikidata is that finite infrastructure resources mean that it is not possible to incorporate highly granular data for every represented field. This contributes to preventing adoption as a primary research platform, as it is precisely this detail which would make it a more attractive proposition for many researchers. The solution to this situation is a model which maintains Wikidata as an umbrella service, linking together a family of independently maintained Wikibases.

The drawbacks of an open editing policy

As previously mentioned, there are almost no impediments to editing Wikidata, as a deliberate means of encouraging community involvement [2]. An effect of this is that there is no inherent preference given to a user’s expertise in a specific area, which means that a domain expert could find their contribution overwritten by a user with only a cursory knowledge of the subject. There are also no mechanisms to assert authority based on access to physical evidence (relevant to cultural institutions, access to the artefacts themselves) or first-hand experience [3]. The combination of these factors makes an institution’s decision to contribute and/or rely on Wikidata a complex one, as they could easily find themselves in the role of “digital gardener“, not just contributing data but having to maintain their statements from alteration.

There are also some general issues around citation on the platform. There exists a well supported method for applying a reference link to any statement, most often as a web link to a secondary source which supports the claim. This is however not a mandatory attribute, so many statements are simply presented “as-is” without any justification or means of verification. The heavy reliance on web links also means that “link rot” is a concern.

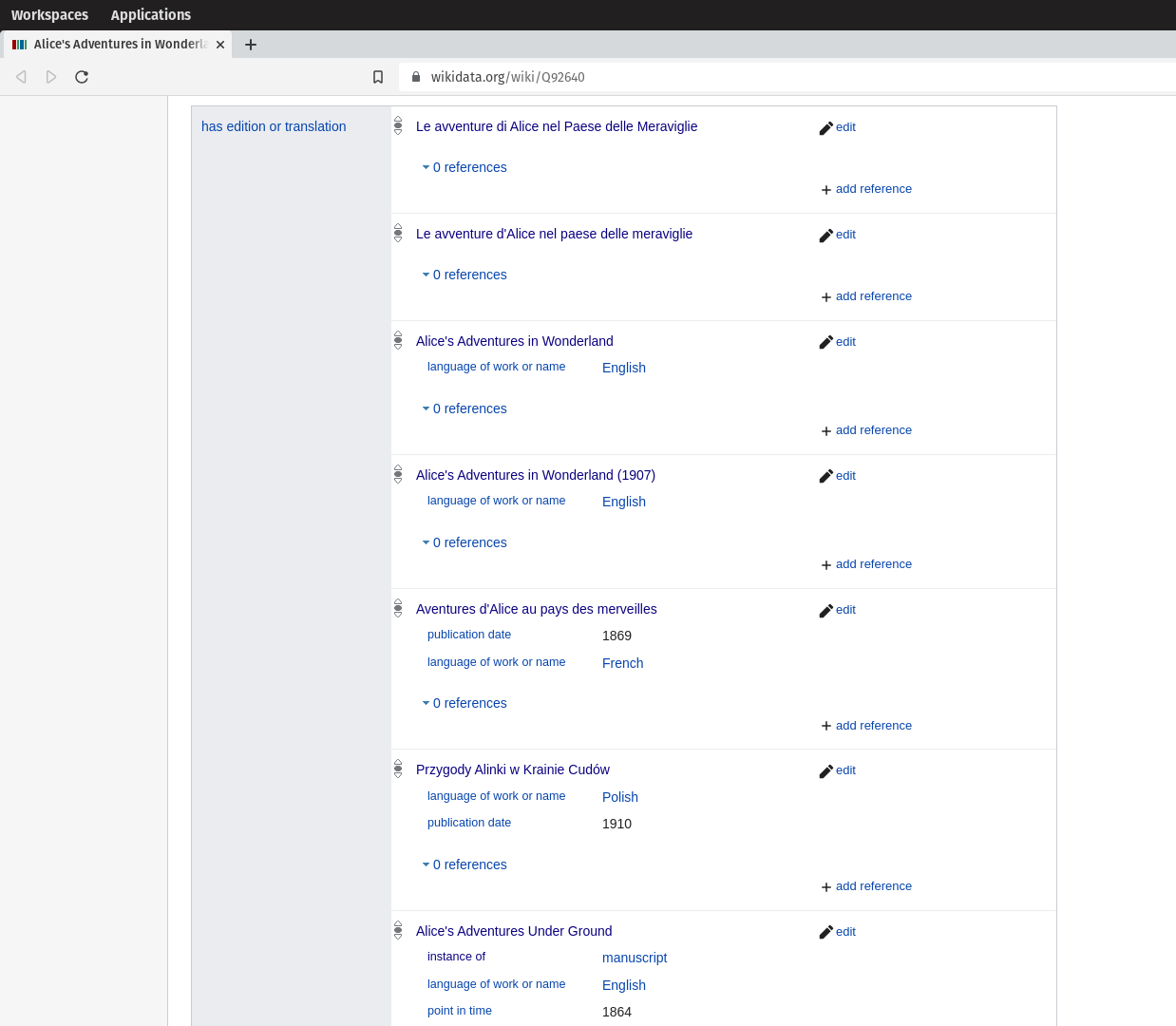

A further problem with an open editing policy relates to adherence to schemas and ontologies. Wikidata was conceived with an inherently flat structure due to its primary function as facilitating data exchange between different language Wikipedia resources, which resulted in an initial one-to-one relationship between the two platforms. Increasingly there has been movement to define multi-tiered structures for cultural data (such as WikiProject Books), but there are some issues which can be best illustrated with some analysis of data related to literature.

Contemporary models for cataloguing (for example FRBR, BIBFRAME or FIAF) rely on structures generally involving at least three tiers. This begins with a “work” or “expression” to represent the artwork as an abstract entity, a “manifestation” or “edition” for each interchangeable batch of physical material, and an “item” to represent an individual physical artefact. This is relatively easy to understand in relation to books. Alice’s Adventures in Wonderland is an artistic “work”, individual ISBNs [4] delineate different “editions“, and an “item” is a physical copy sitting on a shelf. It is unreasonable to expect individual “items” to be represented in Wikidata unless they are especially notable [5], although it is worth observing that some disciplines place a great deal of importance on a specific item being identified [6]. However the predominant tension arises from attempting to enforce the “work” and “edition” levels of the schema as distinct ontological elements on a platform not initially intended to facilitate such structures.

EntitySchemas, and their incorporation into the Cradle data contribution tool are a positive step towards enforcing some structure into what is otherwise unrestricted data modelling [9]. These systems work by identifying key mandatory properties which must be applied to entities of a given type, or require their use at the point of creation [10].

Granularity and specificity

A solution to many of the problems outlined above would be a decentralised ecosystem, with Wikidata maintaining higher level identifiers connected to a collection of Wikibases, each containing a vast expansion of detail for a given subject area. In this model Alice’s Adventures in Wonderland could remain as a single entity on Wikidata (the “work”), connected with other Wikibases which wish to link their resources (most obviously in this instance, Wikibases run by libraries).

An example of this model relevant to film studies would be to imagine a Wikibase devoted entirely to the life and work of French filmmaker Agnès Varda. At present her representation on Wikidata amounts to a total of only around 200 data statements (mostly identifiers linking to other resources), but it is easy to imagine a much larger corpus of data including enhanced bibliographic detail [11], semantic annotation of her writings, or machine analysis of her films [12]. Linking the relevant crossover entities (individuals and works) between the two systems enables the ability to construct federated queries which start at the top level and reach down into respective domain-specific knowledge repositories [13].

An example of such a federating query could be the average shot length for French new-wave filmmakers. This is not a metric currently recorded on Wikidata, and if it were it would be stated simply as an integer. Instead we are describing a query which retrieves granular shot information from associated Wikibases via shared identifiers and performs analysis in a transparent and verifiable process, with the possibility for increasingly involved analysis (e.g. Are there more cuts at the beginning or end of these films? Did cutting speed increase or decrease over time?).

Restricting edit access, or leveraging user-based rollback functions would allow institutions to steward autonomous Wikibases as being representative of their knowledge in a given domain, while also maintaining and policing adherence to a defined data model.

Hybridised knowledge

An example of transforming this theory into a tangible outcome is the minimum viable product (MVP) [14] developed by the Open Science Lab for NFDI4Culture. This project demonstrates the ability to create a dedicated Wikibase to house extremely specific metadata, in this case annotations related to 3D-model representations of physical objects and locations of historical significance. The data model intentionally features significant overlap with Wikidata properties, to facilitate federated querying. Furthermore, the placement on institutional infrastructure means that ultimately the organisation has complete control over the data represented on the platform, and controls all contributions.

Outlook

The ability to deploy Wikibase is becoming increasingly easier given a focus on providing containerised packages and installation templates, with community engagement and growth being a goal explicitly stated in the Wikimedia Linked Open Data strategy. As with all ecosystems, the likelihood of this model being fully realised is dependent on adoption, and the willingness of relevant organisations to engage with this vision. The phase we are currently in is an exciting one, in which potential can be explored and experimentation can show what could be available to researchers in the future.

Notes

- „Production“ is used here in the sense defined by the Agile development model.

- Wikidata’s vandalism strategy is mostly reparative, not preventative, relying on extensive versioning information to rollback undesirable edits.

- As Philip Roth discovered when he attempted to update a Wikipedia page for a book of which he was the author.

- ISBNs indeed provide a rare example where a URI is already “minted”, attached to a physical item and ready to be reused as a persistent identifier for that edition.

- One of few examples of an “item” level book entity is the Lincoln Bible.

- There is surprising variety amongst extant artefacts of early cinema, in many cases due to distinct colouring or modification processes performed on individual prints.

- The Wikidata page for Alice’s Adventures in Wonderland only lists 17 editions. OpenLibrary lists 1,271 and GoodReads lists 12,397.

- This edit of Drive Your Plow Over the Bones of the Dead shows where an “edition” has been conflated with a “work”, causing a self-reflexive triple: Q11827997 -> edition or translation of (P629) -> itself.

- An attempt at creating a completely nonsensical edit by the author can be found here: Galore (a film) -> has eye colour -> a hat.

- The Cradle template for “actor” expects at minimum mandatory input for a “gender”, “occupation” as “actor” and “instance of” “human”.

- The Wikipedia entry for Varda contains many bibliographic details not reflected in her Wikidata entry.

- Filmbase is an early prototype for automatically extracting metadata from a digital representation of a film using FOSS software and populating a dedicated Wikibase.

- The Rhizome endpoint contains examples of federated searches which draw upon their own data, combined with bibliographic data from Wikidata.

- Rossenova, Lozana. (2021, October 29). Semantic annotation for 3D cultural artefacts: MVP. Zenodo. https://doi.org/10.5281/zenodo.5628847

0 Antworten auf “Wikidata and Wikibase as complementary research services for cultural heritage data”