Usability tests in practice – a progress report of the ROSI project

by Grischa Fraumann and Svantje Lilienthal

How do you find out whether users can work well with an application you have developed? Asking the users directly is an obvious way to answer this question.

Within the ROSI project (Reference Implementation for Open Scientometric Indicators), we conducted usability tests to evaluate the software application we developed. In this blog post, we describe our approach and our experiences in order to encourage other projects to try something similar. We briefly introduce the project, then summarise the usability test procedure, and conclude with some recommendations.

The Project

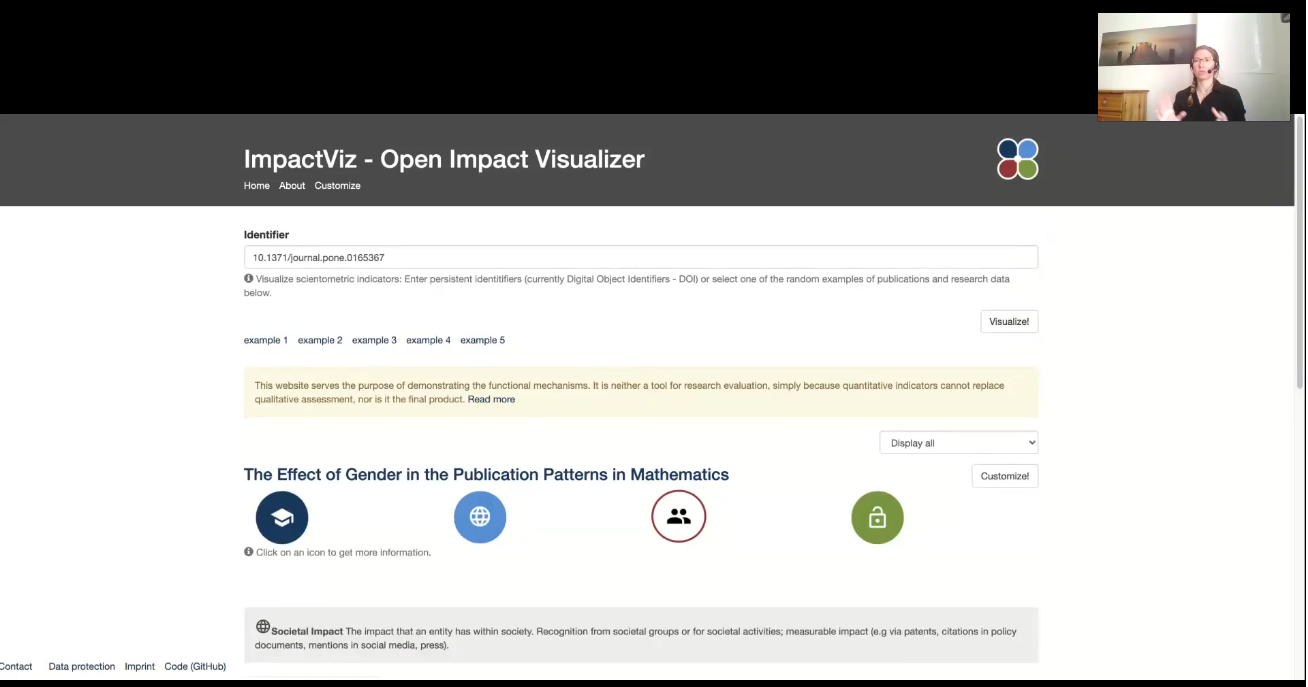

The ROSI project is about the user-centered development of a prototype for the customizable representation of open scientometric indicators (e.g. citations or mentions of scientific publications in Wikipedia). Further information about the motivation of the project and the directory of scientometric data sources can be found in separate blog posts. As part of this development, potential users were consulted in interviews and workshops while a web application (see the blog post on the prototype) was developed in parallel. This iterative approach produced several versions, each of which could be improved on the basis of user feedback, so that user needs were taken into account and development stayed targeted. The usability tests mentioned above are also an important element of this process: users test the application under supervision and voice their thoughts while doing so (so-called Think Aloud Testing).

Procedure

We planned a total of four online interviews with different participants. One of them was a test interview, which we used to try out the technology and processes in advance. In the following, we briefly describe the individual phases: planning, implementation and evaluation.

Planning

Thanks to the interviews and workshops carried out earlier in the project, we had access to a large number of potential participants. We also benefited from the experience of other TIB projects; among others, we took part in the evaluation of the Europeana Enhanced Unified Media Player. We defined the interview questions and tasks in advance and adjusted them again after the test interview. The participants were then invited personally by e-mail.

Implementation

The online interviews were conducted by two of us, and we divided the tasks between moderation and note-taking (sometimes alternating). The interviews usually lasted 60 minutes, although some were slightly shorter, depending on how the participants answered the open questions. We used WebEx and asked the participants to share their screen and to turn on their camera.

At the beginning of the interview, the project and the goals of the test were briefly introduced, and the users had time to explore the application by themselves. Afterwards, they were given some specific tasks. For example, the users had to find out which data source is associated with a certain indicator. Finally, some open questions were asked to find out the users' opinions (e.g. whether they had any further suggestions for improving the visualization).

Evaluation

The users were able to test the various functions of the prototype largely without problems; some ambiguities occurred in only one of the use cases. Another important piece of feedback was the wish for comprehensive explanations of the contents and functions of the prototype. The free test at the beginning made it much easier for the users to get started with the interview and, in some cases, to complete the tasks set.

Lessons Learned and Conclusion

What would we recommend to similar projects?

- It is worthwhile to take part in similar evaluations as a participant beforehand in order to improve your own usability tests.

- The procedure, questions and tasks should be tried out in a test interview beforehand. It is particularly important to test the technology very thoroughly at this stage.

- It is easier to divide the tasks (moderation and taking notes) between two people during the interview.

- Users are very different and have, for example, different levels of technical knowledge. Therefore, it is an advantage to know how experienced the users are. If necessary, the users can also receive assistance in solving the tasks.

An on-site evaluation might have taken more time. Overall, the results of the online interviews were very helpful and the effort was reasonable.

I am a member of the IBID research group (Information Behavior and Interaction Design) at the Department of Communication. Before joining the University of Copenhagen, I worked as a Research Assistant at TIB Leibniz Information Centre for Science and Technology. I was also a Visiting Researcher at the Centre for Science and Technology Studies (CWTS), Leiden University.